The other day, we went to a designer's fashion shop whose owner was

rather adamant that he was never ever going to wear a face mask, and

that he didn't believe the COVID-19 thing was real. When I argued for

the opposing position, he pretty much dismissed what I said out of hand,

claiming that "the hospitals are empty dude" and "it's all a lie". When

I told him that this really isn't true, he went like "well, that's just

your opinion". Well, no -- certain things are facts, not opinions. Even

if you don't believe that this disease kills people, the idea that this

is a matter of opinion is missing the ball by so much that I was pretty

much stunned by the level of ignorance.

His whole demeanor pissed me off rather quickly. While I disagree with

the position that it should be your decision whether or not to wear a

mask, it's certainly possible to have that opinion. However, whether or

not people need to go to hospitals is not an opinion -- it's something

else entirely.

After calming down, the encounter got me thinking, and made me focus on

something I'd been thinking about before but hadn't fully forumlated:

the fact that some people in this world seem to misunderstand the nature

of what it is to do science, and end up, under the claim of being

"sceptical", with various nonsense things -- see scientology, flat earth

societies, conspiracy theories, and whathaveyou.

So, here's something that might (but probably won't) help some people

figuring out stuff. Even if it doesn't, it's been bothering me and I

want to write it down so it won't bother me again. If you know all this

stuff, it might be boring and you might want to skip this post.

Otherwise, take a deep breath and read on...

Statements are things people say. They can be true or false; "the

sun is blue" is an example of a statement that is trivially false. "The

sun produces light" is another one that is trivially true. "The sun

produces light through a process that includes hydrogen fusion" is

another statement, one that is a bit more difficult to prove true or

false. Another example is "Wouter Verhelst does not have a favourite

color". That happens to be a true statement, but it's fairly difficult

for anyone that isn't me (or any one of the other Wouters Verhelst out

there) to validate as true.

While statements can be true or false, combining statements without more

context is not always possible. As an example, the statement "Wouter

Verhelst is a Debian Developer" is a true statement, as is the statement

"Wouter Verhelst is a professional Volleybal player"; but the statement

"Wouter Verhelst is a professional Volleybal player and a Debian

Developer" is not, because while I am a Debian Developer, I am not a

professional Volleybal player -- I just happen to share a name with

someone who is.

A statement is never a fact, but it can

describe a fact. When a

statement is a true statement, either because we trivially know what it

states to be true or because we have performed an experiment that

proved beyond any possible doubt that the statement is true, then what

the statement describes is a fact. For example, "Red is a color" is a

statement that describes a fact (because, yes, red is definitely a

color, that is a fact). Such statements are called statements of

fact. There are other possible statements. "Grass is purple" is a

statement, but it is not a statement of fact; because as everyone knows,

grass is (usually) green.

A statement can also describe an opinion. "The Porsche 911 is a nice

car" is a statement of opinion. It is one I happen to agree with, but it

is certainly valid for someone else to make a statement that conflicts

with this position, and there is nothing wrong with that. As the saying

goes, "opinions are like assholes: everyone has one". Statements

describing opinions are known as statements of opinion.

The differentiating factor between facts and opinions is that facts are

universally true, whereas opinions only hold for the people who state

the opinion and anyone who agrees with them. Sometimes it's difficult or

even impossible to determine whether a statement is true or not. The

statement "The numbers that win the South African Powerball lottery on

the 31st of July 2020 are 2, 3, 5, 19, 35, and powerball 14" is not a

statement of fact, because at the time of writing, the 31st of July 2020

is in the future, which at this point gives it a 1 in 24,435,180 chance

to be true). However, that does not make it a statement of opinion; it

is not my

opinion that the above numbers will win the South African

powerball; instead, it is my

guess that those numbers will be correct.

Another word for "guess" is hypothesis: a hypothesis is a statement

that may be universally true or universally false, but for which the

truth -- or its lack thereof -- cannot currently be proven beyond doubt.

On Saturday, August 1st, 2020 the above statement about the South

African Powerball may become a statement of fact; most likely however,

it will instead become a false statement.

An unproven hypothesis may be expressed as a matter of belief. The

statement "There is a God who rules the heavens and the Earth" cannot

currently (or ever) be

proven beyond doubt to be either true or false,

which by definition makes it a hypothesis; however, for matters of

religion this is entirely unimportant, as for believers the belief that

the statement is correct is all that matters, whereas for nonbelievers

the truth of that statement is not at all relevant. A belief is not an

opinion; an opinion is not a belief.

Scientists do not deal with unproven hypotheses, except insofar that

they attempt to prove, through direct observation of nature (either out

in the field or in a controlled laboratory setting) that the hypothesis

is, in fact, a statement of fact. This makes unprovable hypotheses

unscientific -- but that does

not mean that they are false, or even

that they are uninteresting statements. Unscientific statements are

merely statements that science cannot either prove or disprove, and that

therefore lie outside of the realm of what science deals with.

Given that background, I have always found the so-called "conflict"

between science and religion to be a non-sequitur. Religion deals in one

type of statements; science deals in another. The do not overlap, since

a statement can either be proven or it cannot, and religious statements

by their very nature focus on unprovable belief rather than universal

truth. Sure, the range of things that science has figured out the facts

about has grown over time, which implies that religious statements have

sometimes been proven false; but is it heresy to say that "animals exist

that can run 120 kph" if that is the truth, even if such animals don't

exist in, say, Rome?

Something very similar can be said about conspiracy theories. Yes, it is

possible to hypothesize that NASA did not send men to the moon, and that

all the proof contrary to that statement was somehow fabricated.

However, by its very nature such a hypothesis cannot be proven or

disproven (because the statement states that

all proof was

fabricated), which therefore implies that it is an unscientific

statement.

It is good to be sceptical about what is being said to you. People can

have various ideas about how the world works, but only one of those

ideas -- one of the possible hypotheses -- can be true. As long as a

hypothesis remains unproven, scientists

love to be sceptical

themselves. In fact, if you can somehow prove beyond doubt that a

scientific hypothesis is false, scientists will love you -- it means

they now know something more about the world and that they'll have to

come up with something else, which is a lot of fun.

When a scientific experiment or observation proves that a certain

hypothesis is true, then this

probably turns the hypothesis into a

statement of fact. That is, it is of course possible that there's a flaw

in the proof, or that the experiment failed (but that the failure was

somehow missed), or that no observance of a particular event happened

when a scientist tried to observe something, but that this was only

because the scientist missed it. If you can show that any of those

possibilities hold for a scientific proof, then you'll have turned a

statement of fact back into a hypothesis, or even (depending on the

exact nature of the flaw) into a false statement.

There's more. It's human nature to want to be rich and famous, sometimes

no matter what the cost. As such, there

have been scientists who have

falsified experimental results, or who have claimed to have observed

something when this was not the case. For that reason, a scientific

paper that gets written after an experiment turned a hypothesis into

fact describes not only the results of the experiment and the observed

behavior, but also the

methodology: the way in which the experiment

was run, with enough details so that

anyone can retry the experiment.

Sometimes that may mean spending a large amount of money just to be able

to run the experiment (most people don't have an LHC in their backyard,

say), and in some cases some of the required materials won't be

available (the latter is expecially true for, e.g., certain chemical

experiments that involve highly explosive things); but the information

is always

there, and if you spend enough time and money reading

through the available papers, you will be able to independently prove

the hypothesis yourself. Scientists tend to do just that; when the

results of a new experiment are published, they will try to rerun the

experiment, partially because they want to see things with their own

eyes; but partially also because if they can find fault in the

experiment or the observed behavior, they'll have reason to write a

paper of their own, which will make them a bit more rich and famous.

I guess you could say that there's three types of people who deal with

statements: scientists, who deal with provable hypotheses and

statements of fact (but who have no use for unprovable hypotheses and

statements of opinion); religious people and conspiracy theorists,

who deal with unprovable hypotheses (where the religious people deal

with these to serve a large cause, while conspiracy theorists

only

care about the unprovable hypotheses); and politicians, who should

care about proven statements of fact and

produce statements of

opinion, but who usually attempt the reverse of those two these days

Anyway...

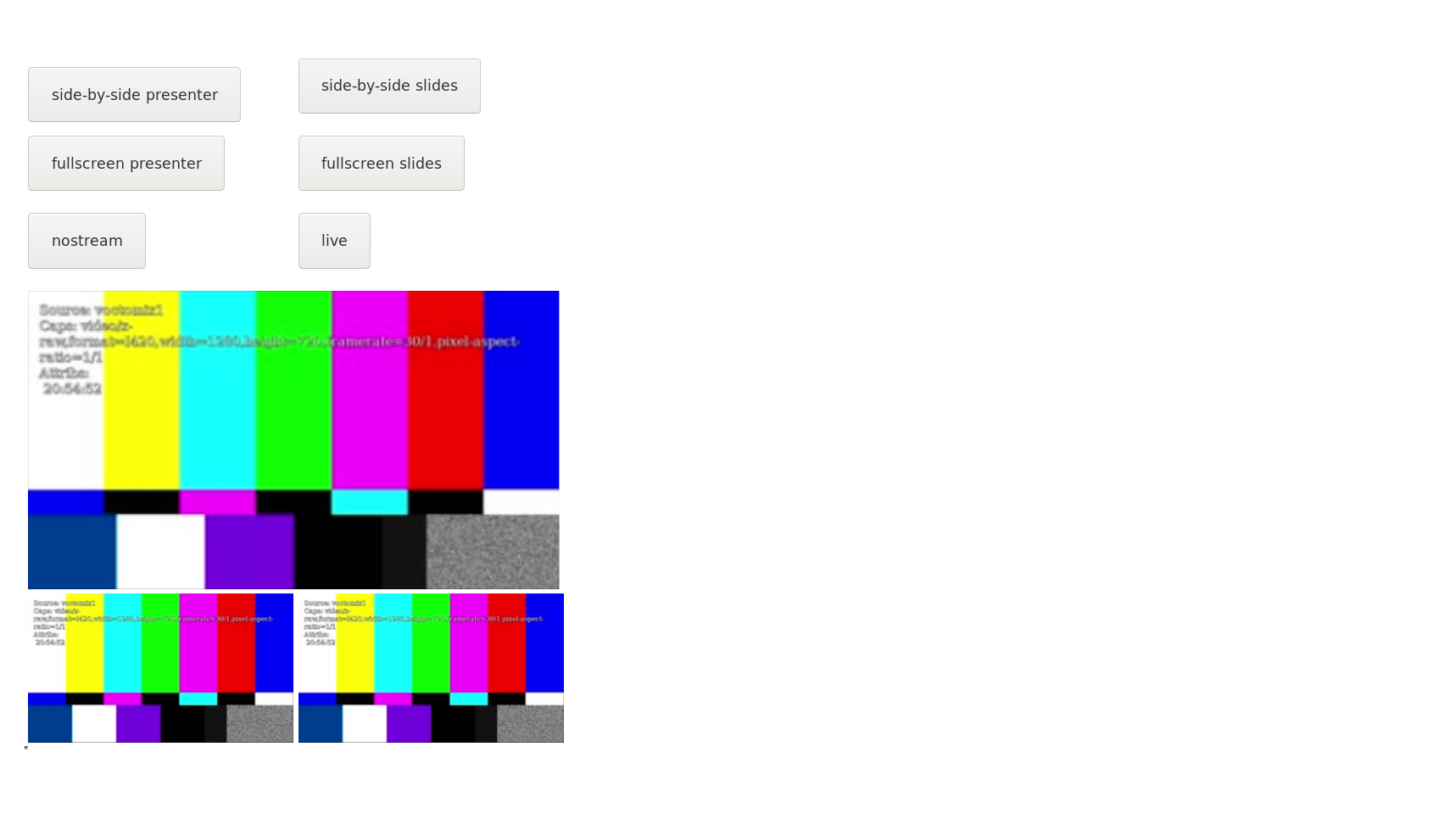

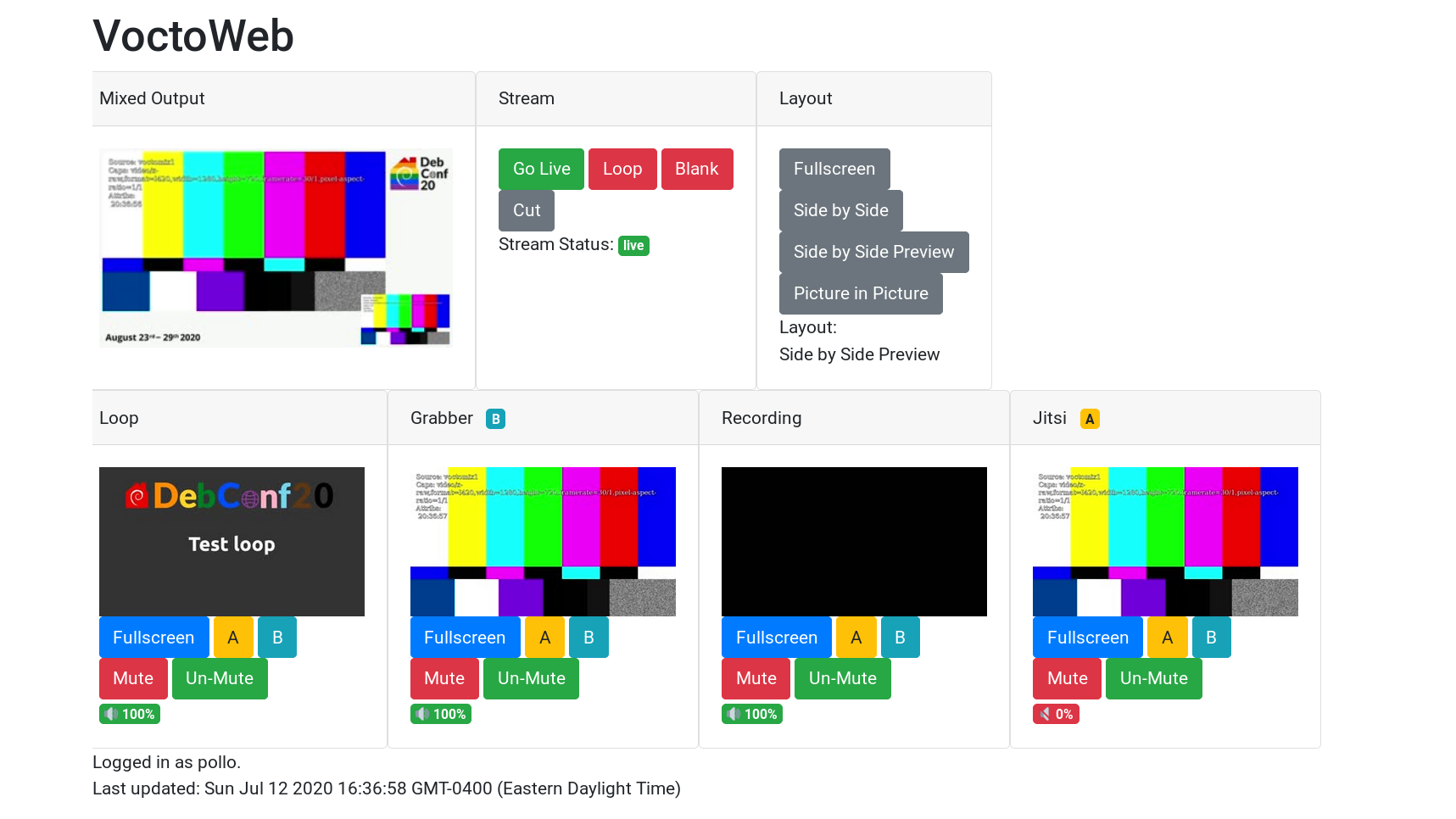

[[!img

Error: Image::Magick is not installed]]

... isn't ready yet, but it's getting there.

I had planned to release a new version of SReview, my online video review and

transcoding system that I wrote originally for FOSDEM but is being used for

DebConf, too, after it was set up and running properly for FOSDEM 2020.

However, things got a bit busy (both in my personal life and in the world at

large), so it fell a bit by the wayside.

I've now also been working on things a bit more, in preparation for an

improved administrator's interface, and have started implementing a REST

API to deal with talks etc through HTTP calls. This seems to be coming

along nicely, thanks to

OpenAPI and the Mojolicious

plugin for parsing

that. I can now

design the API nicely, and autogenerate client side libraries to call

them.

While at it, because libmojolicious-plugin-openapi-perl isn't available

in Debian 10 "buster", I moved the docker

containers

over from stable to testing. This revealed that both

bs1770gain and

inkscape changed their command

line incompatibly, resulting in me having to work around those

incompatibilities. The good news is that I managed to do so in a way

that keeps running SReview on Debian 10 viable, provided one installs

Mojolicious::Plugin::OpenAPI from CPAN rather than from a Debian

package. Or installs a backport of that package, of course. Or, heck,

uses the Docker containers in a kubernetes environment or some such --

I'd love to see someone use that in production.

Anyway, I'm still finishing the API, and the implementation of that API

and the test suite that ensures the API works correctly, but progress is

happening; and as soon as things seem to be working properly, I'll do a

release of SReview 0.6, and will upload that to Debian.

Hopefully that'll be soon.

... isn't ready yet, but it's getting there.

I had planned to release a new version of SReview, my online video review and

transcoding system that I wrote originally for FOSDEM but is being used for

DebConf, too, after it was set up and running properly for FOSDEM 2020.

However, things got a bit busy (both in my personal life and in the world at

large), so it fell a bit by the wayside.

I've now also been working on things a bit more, in preparation for an

improved administrator's interface, and have started implementing a REST

API to deal with talks etc through HTTP calls. This seems to be coming

along nicely, thanks to

OpenAPI and the Mojolicious

plugin for parsing

that. I can now

design the API nicely, and autogenerate client side libraries to call

them.

While at it, because libmojolicious-plugin-openapi-perl isn't available

in Debian 10 "buster", I moved the docker

containers

over from stable to testing. This revealed that both

bs1770gain and

inkscape changed their command

line incompatibly, resulting in me having to work around those

incompatibilities. The good news is that I managed to do so in a way

that keeps running SReview on Debian 10 viable, provided one installs

Mojolicious::Plugin::OpenAPI from CPAN rather than from a Debian

package. Or installs a backport of that package, of course. Or, heck,

uses the Docker containers in a kubernetes environment or some such --

I'd love to see someone use that in production.

Anyway, I'm still finishing the API, and the implementation of that API

and the test suite that ensures the API works correctly, but progress is

happening; and as soon as things seem to be working properly, I'll do a

release of SReview 0.6, and will upload that to Debian.

Hopefully that'll be soon.

Anyway...

[[!img

Anyway...

[[!img

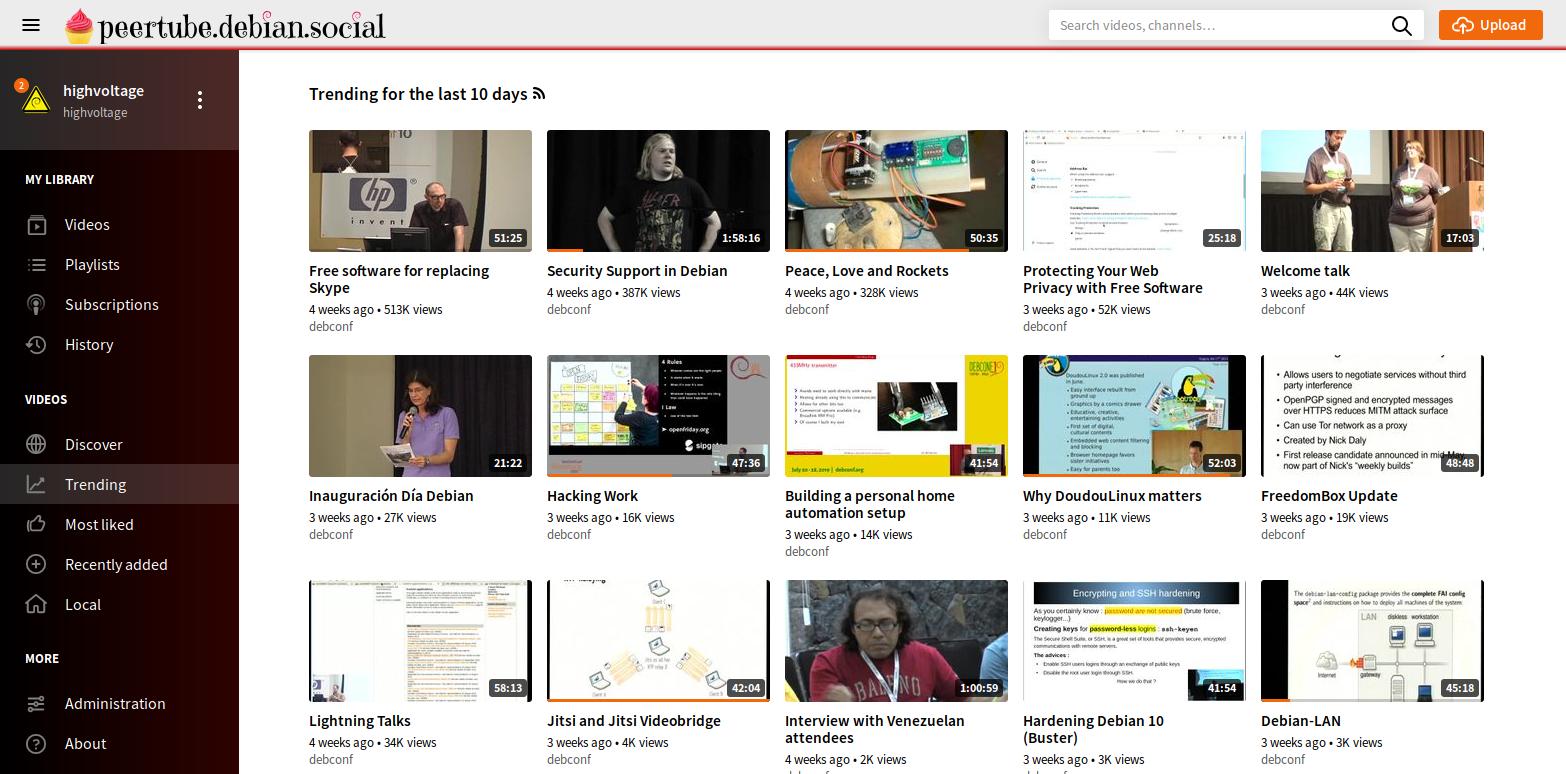

I know most Debian people know about this already But in case you

don t follow the usual Debian communications channels, this might

interest you!

Given most of the world is still under COVID-19 restrictions, and that

we want to work on Debian, given there is no certainty as to what the

future holds in store for us Our DPL fearless as they always are

had the bold initiative to make this weekend into the first-ever

I know most Debian people know about this already But in case you

don t follow the usual Debian communications channels, this might

interest you!

Given most of the world is still under COVID-19 restrictions, and that

we want to work on Debian, given there is no certainty as to what the

future holds in store for us Our DPL fearless as they always are

had the bold initiative to make this weekend into the first-ever

So, we are already halfway through DebCamp (which means, you can come

and hang out with us in the

So, we are already halfway through DebCamp (which means, you can come

and hang out with us in the

Wouter s tasks

Wouter s tasks Wouter s props, needed to complete his tasks

Wouter s props, needed to complete his tasks Bike tour leg at Cape Town Stadium.

Bike tour leg at Cape Town Stadium. Seeking out 29 year olds.

Seeking out 29 year olds. Wouter finishing his lemon and actually seemingly enjoying it.

Wouter finishing his lemon and actually seemingly enjoying it. Reciting South African national anthem notes and lyrics.

Reciting South African national anthem notes and lyrics. The national anthem, as performed by Wouter (I was actually impressed by how good his pitch was).

The national anthem, as performed by Wouter (I was actually impressed by how good his pitch was).

Accommodation at the lodge

Accommodation at the lodge Debian swirls everywhere

Debian swirls everywhere I took a canoe ride on the river and look what I found, a paddatrapper!

I took a canoe ride on the river and look what I found, a paddatrapper!

A bit of digital zoomage of previous image.

A bit of digital zoomage of previous image. Time to say the vows.

Time to say the vows. Just married. Thanks to Sue Fuller-Good for the photo.

Just married. Thanks to Sue Fuller-Good for the photo. Except for one character being out of place, this was a perfect fairy tale wedding, but I pointed Wouter to

Except for one character being out of place, this was a perfect fairy tale wedding, but I pointed Wouter to